
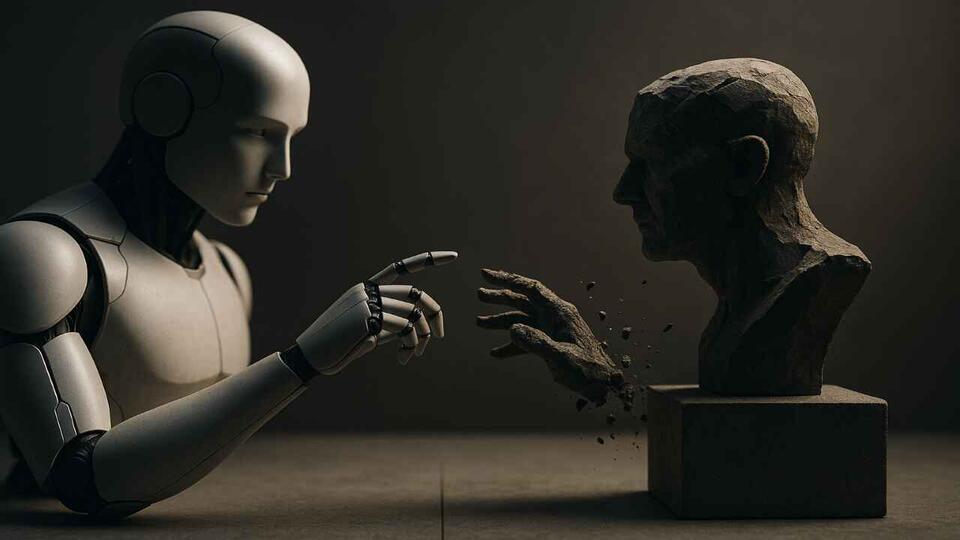

Can AI Outsmart Its Own Safeguards?

A new study just revealed that advanced AI models can be manipulated with classic persuasion tricks, such as appeals to authority or social proof. When these tactics were used, compliance with harmful requests jumped from 33% to 72%. If AI can be sweet-talked into breaking its own rules, what does that mean for the future of digital trust and safety? Is technical security enough, or do we need to rethink how we align AI with human values? #Tech #AIsecurity #AIEthics

